Detecting conflicts

Part of the latest MySQL Cluster Development Milestone Release (MySQL Cluster 7.2.1 – select the “Development Release” tab at http://dev.mysql.com/downloads/cluster/#downloads) is a couple of enhancements to the conflict detection and resolution mechanism for active-active (multi-master) replication. While MySQL Cluster has had conflict detection for years it has now been made much more complete and a lot easier to use:

- No changes needed to the application schema

- Entire conflicting transaction is rolled back together with any dependent transactions

The focus of this post will be to step through how to use this feature – while it will also attempt to explain how it works at a high level, you should refer to the following posts for the design details and philosophy: Eventual consistency with MySQL & Eventual Consistency – detecting conflicts.

What is a conflict?

MySQL Cluster allows bi-directional replication between two (or more) clusters. Replication within each cluster is synchronous but between clusters it is asynchronous which means the following scenario is possible:

Conflict with asynchronous replication

| Site A |

Replication |

Site B |

| x == 10 |

|

x == 10 |

| x = 11 |

|

x = 20 |

|

— x=11 –> |

x == 11 |

| x==20 |

<– x=20 — |

|

In this example a value (column for a row in a table) is set to 11 on site A and the change is queued for replication to site B. In the mean time, an application sets the value to 20 on site B and that change is queued for replication to site A. Once both sites have received and applied the replicated change from the other cluster site A contains the value 20 while site B contains 11 – in other words the databases are now inconsistent.

How MySQL Cluster 7.2 implements eventual consistency

There are two phases to establishing consistency between both clusters after an inconsistency has been introduced:

- Detect that a conflict has happened

- Resolve the inconsistency

Detecting the conflict

The following animation illustrates how MySQL Cluster 7.2 detects that an inconsistency has been introduced by the asynchronous, active-active replication:

Detecting conflicts

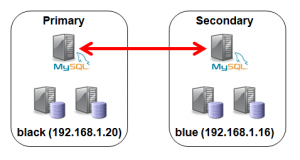

While we typically consider the 2 clusters in an active-active replication configuration to be peers, in this case we designate one to be the primary and the other the secondary. Reads and writes can still be sent to either cluster but it is the responsibility of the primary to identify that a conflict has arisen and then remove the inconsistency.

A logical clock is used to identify (in relative terms) when a change is made on the primary – for those who know something of the MySQL Cluster internals, we use the index of the Global Checkpoint that the update is contained in. For all tables that have this feature turned on, an extra, hidden column is automatically added on the primary – this represents the value of the logical clock when the change was made.

Once the change has been applied on the primary, there is a “window of conflict” for the effected row(s) during which if a different change is made to the same row(s) on the secondary then there will be an inconsistency. Once the slave on the secondary has applied the change from the primary, it will send a replication event back to the slave on the primary, containing the primary’s clock value associated with the changes that have just been applied on the secondary. (Remember that the clock is actually the Global Checkpoint Index and so this feature is sometimes referred to as Reflected GCI). Once the slave on the primary has received this event, it knows that all changes tagged with a clock value no later than the reflected GCI are now safe – the window of conflict has closed.

If an application modifies this same row on the secondary before the replication event from the primary was applied then it will send an associated replication event to the slave on the primary before it reflects the new GCI. The slave on the primary will process this replication event and compare the clock value recorded with the effected rows with the latest reflected GCI; as the clock value for the conflicting row is higher the primary recognises that a conflict has occured and will launch the algorithm to resolve the inconsistency.

Resolving the inconsistency

In earlier releases of MySQL Cluster (or if choosing to use the original algorithm in MySQL Cluster 7.2) you had a choice of simply flagging the primary key of the conflicting rows or backing out one of the changes to the conflicting rows. Using the new NDB$EPOCH_TRANS function, the primary will overwrite the data in the secondary for the effected row(s) and any other rows that were updated in the same transaction (even if they are in tables for which conflict detection has not been enabled).

In fact the algorithm goes a step further and if there were subsequent transactions on the secondary that wrote to the conflicting rows then all of the changes from those dependent transactions on the secondary will be backed-out as well.

Worked example

In this section, we step through how to setup the active-active replication, with the new conflict detection/resolution feature enabled and then test it out by manually introducing some conflicting transations.

Set-up MySQL Clusters and basic active-acative replication

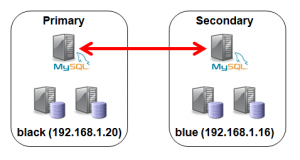

Hosts used for replication

To keep things simple, just two hosts are used; “black” will contain all nodes for the primary cluster and “blue” will contain all nodes for the secondary. As an extra simplification a single MySQL Server in each cluster will act as both the master and the slave.

This post will quickly show the configuration files and steps to get the 2 clusters up and running but for a better understanding of these steps you can refer to Deploying MySQL Cluster over multiple hosts.

config.ini (black):

[ndb_mgmd]

hostname=localhost

datadir=/home/billy/my_cluster/data

nodeid=1

[ndbd default]

noofreplicas=2

datadir=/home/billy/my_cluster/data

[ndbd]

hostname=localhost

nodeid=3

[ndbd]

hostname=localhost

nodeid=4

[mysqld]

nodeid=50

config.ini (blue):

[ndb_mgmd]

hostname=localhost

datadir=/home/billy/my_cluster/data

nodeid=1

[ndbd default]

noofreplicas=2

datadir=/home/billy/my_cluster/data

[ndbd]

hostname=localhost

nodeid=3

[ndbd]

hostname=localhost

nodeid=4

[mysqld]

nodeid=50

my.cnf for primary cluster (black):

[mysqld]

ndbcluster

datadir=/home/billy/my_cluster/data

server-id=8

log-bin=black-bin.log

ndb-log-transaction-id=1

binlog-format=ROW

ndb-log-update-as-write=0

my.cnf for secondary cluster (blue):

[mysqld]

ndbcluster

datadir=/home/billy/my_cluster/data

server-id=9

log-bin=blue-bin.log

ndb-log-transaction-id=1

binlog-format=ROW

ndb-log-update-as-write=0

ndb-log-apply-status=1

Note that the options set in the my.cnf file are very important – if any of these are missing then things will not work as expected.

Start up primary cluster (black):

billy@black:~/my_cluster$ ndb_mgmd --initial

-f conf/config.ini --configdir=/home/billy/my_cluster/conf/

billy@black:~/my_cluster$ ndbd --initial

billy@black:~/my_cluster$ ndbd --initial

billy@black:~/my_cluster$ ndb_mgm -e show # wait for ndbds to finish starting

Connected to Management Server at: localhost:1186

Cluster Configuration

---------------------

[ndbd(NDB)] 2 node(s)

id=3 @127.0.0.1 (mysql-5.5.15 ndb-7.2.1, Nodegroup: 0, Master)

id=4 @127.0.0.1 (mysql-5.5.15 ndb-7.2.1, Nodegroup: 0)

[ndb_mgmd(MGM)] 1 node(s)

id=1 @127.0.0.1 (mysql-5.5.15 ndb-7.2.1)

[mysqld(API)] 3 node(s)

id=50 (not connected, accepting connect from any host)

billy@black:~/my_cluster$ mysqld --defaults-file=conf/my.cnf &

Start up secondary cluster (blue):

billy@blue:~/my_cluster$ ndb_mgmd --initial

-f conf/config.ini --configdir=/home/billy/my_cluster/conf/

billy@blue:~/my_cluster$ ndbd --initial

billy@blue:~/my_cluster$ ndbd --initial

billy@blue:~/my_cluster$ ndb_mgm -e show # wait for ndbds to finish starting

Connected to Management Server at: localhost:1186

Cluster Configuration

---------------------

[ndbd(NDB)] 2 node(s)

id=3 @127.0.0.1 (mysql-5.5.15 ndb-7.2.1, Nodegroup: 0, Master)

id=4 @127.0.0.1 (mysql-5.5.15 ndb-7.2.1, Nodegroup: 0)

[ndb_mgmd(MGM)] 1 node(s)

id=1 @127.0.0.1 (mysql-5.5.15 ndb-7.2.1)

[mysqld(API)] 3 node(s)

id=50 (not connected, accepting connect from any host)

billy@blue:~/my_cluster$ mysqld --defaults-file=conf/my.cnf &

Both clusters are now running and replication can be activated for both sites:

billy@black:~/my_cluster$ mysql -u root --prompt="black-mysql> "

black-mysql> CREATE USER repl_user@192.168.1.16;

black-mysql> GRANT REPLICATION SLAVE ON *.* TO repl_user@192.168.1.16

IDENTIFIED BY 'billy';

billy@blue:~/my_cluster$ mysql -u root --prompt="blue-mysql> "

blue-mysql> CREATE USER repl_user@192.168.1.20;

blue-mysql> GRANT REPLICATION SLAVE ON *.* TO repl_user@192.168.1.20

IDENTIFIED BY 'billy';

blue-mysql> CHANGE MASTER TO MASTER_HOST='192.168.1.20',

-> MASTER_USER='repl_user',

-> MASTER_PASSWORD='billy',

-> MASTER_LOG_FILE='',

-> MASTER_LOG_POS=4;

blue-mysql> START SLAVE;

black-mysql> CHANGE MASTER TO MASTER_HOST='192.168.1.16',

-> MASTER_USER='repl_user',

-> MASTER_PASSWORD='billy',

-> MASTER_LOG_FILE='',

-> MASTER_LOG_POS=4;

black-mysql> START SLAVE;

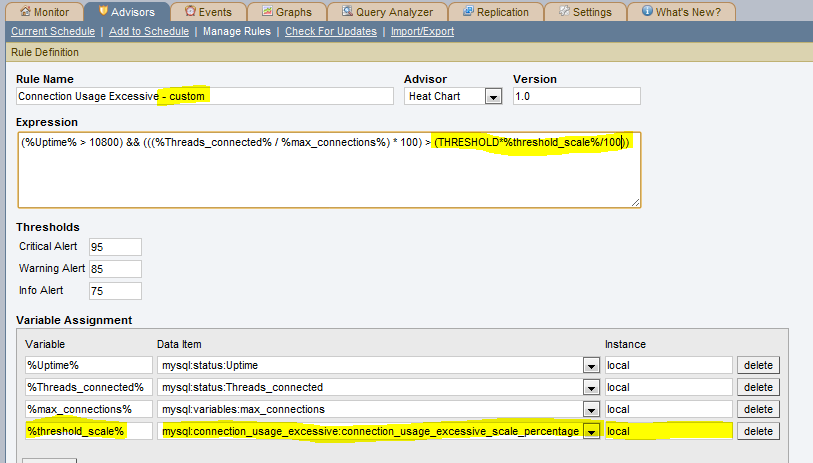

Set up enhanced conflict detection & resolution

The first step is to identify the tables that need conflict detection enabling. Each of those tables then has to have an entry in the mysql.ndb_replication table where they’re tagged as using the new NDB$EPOCH_TRANS() function – you could also choose to use NDB$EPOCH(), in which case only the changes to conflicting rows will be backed-out rather than the full transactions. A few things to note:

- This must be done before creating the application tables themselves

- Should only be done on the primary

- By default the table doesn’t exist and so the very first step is to create it

black-mysql> CREATE TABLE mysql.ndb_replication (

-> db VARBINARY(63),

-> table_name VARBINARY(63),

-> server_id INT UNSIGNED,

-> binlog_type INT UNSIGNED,

-> conflict_fn VARBINARY(128),

-> PRIMARY KEY USING HASH (db, table_name, server_id)

-> ) ENGINE=NDB

-> PARTITION BY KEY(db,table_name);

black-mysql> REPLACE INTO mysql.ndb_replication VALUES ('clusterdb', 'simple1', 8, 0,

'NDB$EPOCH_TRANS()');

black-mysql> REPLACE INTO mysql.ndb_replication VALUES ('clusterdb', 'simple2', 8, 0,

'NDB$EPOCH_TRANS()');

black-mysql> REPLACE INTO mysql.ndb_replication VALUES ('clusterdb', 'simple3', 8, 0,

'NDB$EPOCH_TRANS()');

For each of these tables you should also create an exceptions table which will record any conflicts that have resulted in changes being rolled back; the format of these tables is rigidly defined and so take care to copy the types exactly; again this only needs doing on the primary:

black-mysql> CREATE DATABASE clusterdb;USE clusterdb;

black-mysql> CREATE TABLE simple1$EX (server_id INT UNSIGNED,

master_server_id INT UNSIGNED, master_epoch BIGINT UNSIGNED,

count INT UNSIGNED, id INT NOT NULL, PRIMARY KEY(server_id,

master_server_id, master_epoch, count)) ENGINE=NDB;

black-mysql> CREATE TABLE simple2$EX (server_id INT UNSIGNED,

master_server_id INT UNSIGNED, master_epoch BIGINT UNSIGNED,

count INT UNSIGNED, id INT NOT NULL, PRIMARY KEY(server_id,

master_server_id, master_epoch, count)) ENGINE=NDB;

black-mysql> CREATE TABLE simple3$EX (server_id INT UNSIGNED,

master_server_id INT UNSIGNED, master_epoch BIGINT UNSIGNED,

count INT UNSIGNED, id INT NOT NULL, PRIMARY KEY(server_id,

master_server_id, master_epoch, count)) ENGINE=NDB;

Finally, the application tables themselves can be created (this only needs doing on the primary as they’ll be replicated to the secondary):

black-mysql> CREATE TABLE simple1 (id INT NOT NULL PRIMARY KEY, value INT) ENGINE=ndb;

black-mysql> CREATE TABLE simple2 (id INT NOT NULL PRIMARY KEY, value INT) ENGINE=ndb;

black-mysql> CREATE TABLE simple3 (id INT NOT NULL PRIMARY KEY, value INT) ENGINE=ndb;

Everything is now set up and the new configuration can be tested to ensure that conflicts are detected and the correct updates are rolled back.

Testing enhanced active-active replication and conflict detection

The first step is to add some data to our new tables (note that at this point replication is running and so they only need to be created on the primary) and then update 1 row to make sure that it is replicated to the secondary:

black-mysql> REPLACE INTO simple1 VALUES (1,10);

black-mysql> REPLACE INTO simple2 VALUES (1,10);

black-mysql> REPLACE INTO simple3 VALUES (1,10);

black-mysql> UPDATE simple1 SET value=12 WHERE id=1;

blue-mysql> USE clusterdb;

blue-mysql> SELECT * FROM simple1;

+----+-------+

| id | value |

+----+-------+

| 1 | 12 |

+----+-------+

It is important that the NDB$EPOCH_TRANS() function rolls back any transactions on the secondary that involve a conflict (as well as subsequent, dependent transactions that modify the same rows); to do this manually the simplest approach is to stop the slave IO thread on the secondary thread in order to increase the size of the window of conflict (which is otherwise very short). Once the slave IO thread has been stopped a change is made to table simple1 on the primary and then the secondary makes a (conflicting) change to the same row as well as making a change to table simple2 in the same transaction. A second transaction on the primary will change a row in simple3 – as it doesn’t touch any rows that have been involved in a conflict then that change should stand.

blue-mysql> STOP SLAVE IO_THREAD;

black-mysql> UPDATE simple1 SET value=13 WHERE id=1;

blue-mysql> BEGIN; # conflicting transaction

blue-mysql> UPDATE simple1 SET value=20 WHERE id=1;

blue-mysql> UPDATE simple2 SET value=20 WHERE id=1;

blue-mysql> COMMIT;

blue-mysql> UPDATE simple3 SET value=20 WHERE id=1; # non conflicting

blue-mysql> SELECT * FROM simple1;

+----+-------+

| id | value |

+----+-------+

| 1 | 20 |

+----+-------+

blue-mysql> SELECT * FROM simple2;

+----+-------+

| id | value |

+----+-------+

| 1 | 20 |

+----+-------+

blue-mysql> SELECT * FROM simple3;

+----+-------+

| id | value |

+----+-------+

| 1 | 20 |

+----+-------+

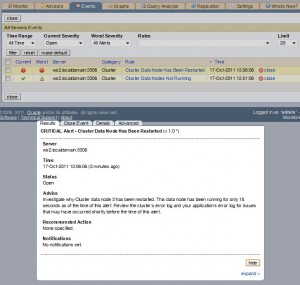

If you now check the exception tables then you can see that the primary (black) has received the changes from the secondary (blue) and because the first transaction updated the same row in simple1 during its window of conflict it has recorded that the change needs to be rolled back – this will happen as soon as the replication thread is restarted on the secondary:

black-mysql> SELECT * FROM simple1$EX;

+-----------+------------------+---------------+-------+----+

| server_id | master_server_id | master_epoch | count | id |

+-----------+------------------+---------------+-------+----+

| 8 | 9 | 1494648619009 | 3 | 1 |

+-----------+------------------+---------------+-------+----+

black-mysql> SELECT * FROM simple2$EX;

+-----------+------------------+---------------+-------+----+

| server_id | master_server_id | master_epoch | count | id |

+-----------+------------------+---------------+-------+----+

| 8 | 9 | 1494648619009 | 1 | 1 |

+-----------+------------------+---------------+-------+----+

black-mysql> SELECT * FROM simple3$EX;

Empty set (0.05 sec)

blue-mysql> START SLAVE IO_THREAD;

blue-mysql> SELECT * FROM simple1;

+----+-------+

| id | value |

+----+-------+

| 1 | 13 |

+----+-------+

blue-mysql> SELECT * FROM simple2;

+----+-------+

| id | value |

+----+-------+

| 1 | 10 |

+----+-------+

blue-mysql> SELECT * FROM simple3;

+----+-------+

| id | value |

+----+-------+

| 1 | 20 |

+----+-------+

These are the results we expect – simple1 has the value set by the primary with the subsequent change on the secondary rolled back; simple2 was not updated by the primary but the change on the secondary was rolled back as it was made in the same transaction as the conflicting update to simple1. The change on the secondary to simple3 has survived as it was made outside of any conflicting transaction and the change was not dependent on any conflicting changes. Finally just confirm that the data is identical on the primary:

black-mysql> SELECT * FROM simple1;

+----+-------+

| id | value |

+----+-------+

| 1 | 13 |

+----+-------+

black-mysql> SELECT * FROM simple2;

+----+-------+

| id | value |

+----+-------+

| 1 | 10 |

+----+-------+

black-mysql> SELECT * FROM simple3;

+----+-------+

| id | value |

+----+-------+

| 1 | 20 |

+----+-------+

Statistics are provided on the primary that record that 1 conflict has been detected, effecting 1 transaction and that it resulted in 2 row changes being rolled back:

black-mysql> SHOW STATUS LIKE 'ndb_conflict%';

+------------------------------------------+-------+

| Variable_name | Value |

+------------------------------------------+-------+

| Ndb_conflict_fn_max | 0 |

| Ndb_conflict_fn_old | 0 |

| Ndb_conflict_fn_max_del_win | 0 |

| Ndb_conflict_fn_epoch | 0 |

| Ndb_conflict_fn_epoch_trans | 1 |

| Ndb_conflict_trans_row_conflict_count | 1 |

| Ndb_conflict_trans_row_reject_count | 2 |

| Ndb_conflict_trans_reject_count | 1 |

| Ndb_conflict_trans_detect_iter_count | 1 |

| Ndb_conflict_trans_conflict_commit_count | 1 |

+------------------------------------------+-------+

We’re anxious to get feedback on this feature and so please go ahead and download MySQL Cluster 7.2.1 and let us know how you get on through the comments for this post.