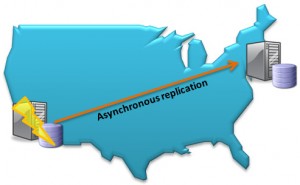

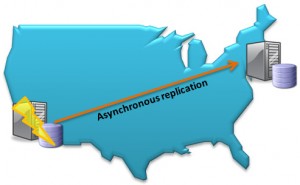

Asynchronous Replication for High Availability

MySQL replication is often positioned as a solution to provide extra throughput for your database (especially when dealing with high numbers of read operations). What tends to be overlooked is how it can be used to provide high availability (HA) – no matter how much redundancy you have at a local level, your system remains at risk from a single catastrophic failure – loss of power, earthquake, terrorist attack etc. By using MySQL asynchronous replication to maintain a geographically remote copy of that data, service can be maintained through any single site failure.

As this replication is asynchronous, there are no low latency demands on the network and so the two sites can be thousands of miles apart while utilising low-cost connections.

This article provides a simple example of how to set up asynchronous replication between 2 MySQL databases in order to provide a Highly Available solution. First of all, it will be 2 databases where the tables will be stored in the MyISAM storage engine and then between 2 MySQL Cluster databases where I also configure it for Multi-master replication where changes can be made at either site.

Subsequent articles will build on this to show:

- Collision detection and resolution when using MySQL Cluster multi-master asynchronous replication

- Introducing asynchronous replication to a running MySQL Cluster database

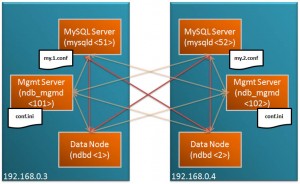

Throughout this article, two machines are used: ws1 (192.168.0.3) and ws2 (192.168.0.4). ws1 will be set up as the master and ws2 as the slave (in the multi-master configuration, both act as both master and slave).

Setting up replication for non-Cluster databases

Replication is performed from one MySQL Server to another; the master makes the changes available and then one or more other Servers pick up those changes and apply them to their own databases. In this example, both databases will store the table data using the same storage engine (MyISAM) but it’s possible to mix and match (for example, take a look at MySQL Cluster – flexibility of replication). As this is intended as a simple introduction to replication, I’m keeping life simple by assuming that this is all being set up before the database goes into production – if that isn’t the case for you and you need to cope with existing data then check out the MySQL documentation or subsequent articles on this site.

The my.cnf files can be set up as normal but the one for the MySQL Server that will act as the Master needs to have binary-logging enabled. Also, each of the server needs to have a unique server-id. Here are the my.cnf files used for this example:

my.cnf (Master)

[mysqld]

datadir=/home/billy/mysql/myisam/data

basedir=/usr/local/mysql

port=3306

server-id=1

log-bin

my.cnf (Slave)

[mysqld]

datadir=/home/billy/mysql/myisam/data

basedir=/usr/local/mysql

port=3306

server-id=2

Fire up the Master MySQL Server:

[billy@ws1 myisam]$ mysqld --defaults-file=my.cnf&

The slave needs a userid/password in order to access the master server – best practice is to create a dedicated user with just the required privileges:

[billy@ws1 myisam]$ mysql -u root

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 1

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> grant replication slave on *.* to 'repl'@'192.168.0.4'

-> identified by 'repl';

Query OK, 0 rows affected (0.00 sec)

The slave can now be told to start processing the replication data that will be staged by the master server:

[billy@ws2 myisam]$ mysqld --defaults-file=my.cnf&

[billy@ws2 myisam]$ mysql -u root

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 2

Server version: 5.1.34-ndb-7.0.6-cluster-gpl MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> CHANGE MASTER TO

-> MASTER_HOST='192.168.0.3',

-> MASTER_PORT=3306,

-> MASTER_USER='repl',

-> MASTER_PASSWORD='repl',

-> MASTER_LOG_FILE='',

-> MASTER_LOG_POS=4;

090803 15:48:09 [Warning] Neither --relay-log nor --relay-log-index were used; so replication may break when this MySQL server acts as a slave and has his hostname changed!! Please use '--relay-log=ws2-relay-bin' to avoid this problem.

Query OK, 0 rows affected (0.01 sec)

mysql> start slave;

090803 15:51:24 [Note] Slave SQL thread initialized, starting replication in log 'FIRST' at position 0, relay log './ws2-relay-bin.000001' position: 4

Query OK, 0 rows affected (0.00 sec)

090803 15:51:24 [Note] Slave I/O thread: connected to master 'repl@192.168.0.3:3306',replication started in log 'FIRST' at position 4

Now to test that replication is up and running, create a table on the master, add some data and then check that the table and data can be read from the slave:

[billy@ws1 myisam]$ mysql -u root

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 3

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> use test

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> create table numbers (num1 int, num2 int);

Query OK, 0 rows affected (0.00 sec)

mysql> insert into numbers values (1,10),(2,20),(3,30);

Query OK, 3 rows affected (0.00 sec)

Records: 3 Duplicates: 0 Warnings: 0

[billy@ws2 myisam]$ mysql -u root

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 5

Server version: 5.1.34-ndb-7.0.6-cluster-gpl MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> use test;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from numbers;

+------+------+

| num1 | num2 |

+------+------+

| 1 | 10 |

| 2 | 20 |

| 3 | 30 |

+------+------+

3 rows in set (0.00 sec)

Multi-Master Replication with MySQL Cluster

Multi-Master Replication for HA with MySQL Cluster

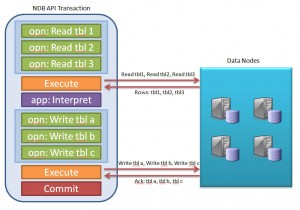

There are a few asynchronous replication capabilities that are unique to MySQL Cluster – one of those is that changes are replicated even if they are made directly to the data nodes using the NDB API, another is that replication can be performed in both directions i.e. multi-master. One of the advantages of this is you can share both read and write operations across both sites so that no capacity is wasted – it also gives you the confidence that either site is sane and ready to take over for the other at any point. You should aim to minimise how often the same rows are modified at the same time on both sites – conflict detection/resolution has been implemented but it can only roll-back the rows changes that conflict with other row changes rather than the full transaction.

It’s important to note that in this article, I’m not talking about the synchronous replication that takes place between data nodes within a single MySQL Cluster site (that happens in parallel and is orthogonal to the asynchronous replication to a remote site).

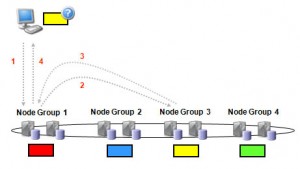

When performing multi-master asynchronous replication between 2 Clusters, 1 (or more) MySQL Servers in each Cluster is nominated as a master and 1 or more as slaves (it can be the same server that takes on both roles and you can have multiple channels set up in case a channel or MySQL Server is lost). Changes made to the data at either site through any of their MySQL Servers (or directly to the data nodes using the NDB API) will be replicated to the other site.

I will focus on setting up the replication, you can refer to Deploying MySQL Cluster over multiple hosts for the steps to configure and run each MySQL Cluster site.

Most of the steps are very similar to those in the first example – the main differences would come when introducing asynchronous replication to a MySQL Cluster instance that already contains data and is up and running (processing updates) which will be covered in a subsequent article.

Binary logging needs to be enabled on the MySQL Server(s) at each site that will act as a replication master:

my1.cnf (Master)

[mysqld]

ndb-nodeid=4

ndbcluster

datadir=/home/billy/mysql/replication/7_0_6/data

basedir=/home/billy/mysql/replication/7_0_6

port=3306

server-id=1

log-bin

my1.cnf (Slave)

[mysqld]

ndb-nodeid=4

ndbcluster

datadir=/home/billy/mysql/replication/7_0_6/data

basedir=/home/billy/mysql/replication/7_0_6

port=3306

server-id=2

log-bin

The MySQL Cluster nodes (including the MySQL Servers (mysqld) from both sites should be started up as normal.

In this case, the replication users should be set up for both Clusters:

[billy@ws1 7_0_6]$ mysqld --defaults-file=my.cnf&

[billy@ws1 7_0_6]$ mysql -u root

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 2

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> grant replication slave on *.* to 'repl'@'192.168.0.4'

-> identified by 'repl';

Query OK, 0 rows affected (0.01 sec)

[billy@ws2 7_0_6]$ mysqld --defaults-file=my.cnf&

[billy@ws2 7_0_6]$ mysql -u root

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 3

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> grant replication slave on *.* to 'repl'@'192.168.0.3'

-> identified by 'repl';

Query OK, 0 rows affected (0.00 sec)

Replication can then be setup and started on each of the MySQL Servers (those acting as slaves for each Cluster):

[billy@ws1 7_0_6]$ mysql -u root

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 3

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> CHANGE MASTER TO

-> MASTER_HOST='192.168.0.4',

-> MASTER_PORT=3306,

-> MASTER_USER='repl',

-> MASTER_PASSWORD='repl',

-> MASTER_LOG_FILE='',

-> MASTER_LOG_POS=4;

090803 17:25:00 [Warning] Neither --relay-log nor --relay-log-index were used; so replication may break when this MySQL server acts as a slave and has his hostname changed!! Please use '--relay-log=ws1-relay-bin' to avoid this problem.

Query OK, 0 rows affected (0.01 sec)

mysql> start slave;

090803 17:27:20 [Note] Slave SQL thread initialized, starting replication in log 'FIRST' at position 0, relay log './ws1-relay-bin.000001' position: 4

Query OK, 0 rows affected (0.02 sec)

090803 17:27:20 [Note] Slave I/O thread: connected to master 'repl@192.168.0.4:3306',replication started in log 'FIRST' at position 4

[billy@ws2 7_0_6]$ mysql -u root

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 4

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> CHANGE MASTER TO

-> MASTER_HOST='192.168.0.3',

-> MASTER_PORT=3306,

-> MASTER_USER='repl',

-> MASTER_PASSWORD='repl',

-> MASTER_LOG_FILE='',

-> MASTER_LOG_POS=4;

090803 17:25:56 [Warning] Neither --relay-log nor --relay-log-index were used; so replication may break when this MySQL server acts as a slave and has his hostname changed!! Please use '--relay-log=ws2-relay-bin' to avoid this problem.

Query OK, 0 rows affected (0.01 sec)

mysql> start slave;

090803 17:27:25 [Note] Slave SQL thread initialized, starting replication in log 'FIRST' at position 0, relay log './ws2-relay-bin.000001' position: 4

Query OK, 0 rows affected (0.00 sec)

090803 17:27:25 [Note] Slave I/O thread: connected to master 'repl@192.168.0.3:3306',replication started in log 'FIRST' at position 4

This time, to make sure that replication is working in both directions, I make changes to both Clusters and then check that they appear at the other site:

[billy@ws1 7_0_6]$ mysql -u root

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 8

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> use test;

Database changed

mysql> create table numbers (num1 int, num2 int) engine=ndb;

090803 17:32:10 [Note] NDB Binlog: CREATE TABLE Event: REPL$test/numbers

090803 17:32:10 [Note] NDB Binlog: logging ./test/numbers (UPDATED,USE_WRITE)

Query OK, 0 rows affected (0.62 sec)

mysql> insert into numbers values (1,10),(2,20),(3,30);

Query OK, 3 rows affected (0.04 sec)

Records: 3 Duplicates: 0 Warnings: 0

billy@ws2 7_0_6]$ mysql -u root

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 8

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> use test

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from numbers;

+------+------+

| num1 | num2 |

+------+------+

| 2 | 20 |

| 3 | 30 |

| 1 | 10 |

+------+------+

3 rows in set (0.01 sec)

mysql> insert into numbers values (4,40),(5,50),(6,60);

Query OK, 3 rows affected (0.00 sec)

Records: 3 Duplicates: 0 Warnings: 0

[billy@ws1 7_0_6]$ mysql -u root

Welcome to the MySQL monitor. Commands end with ; or g.

Your MySQL connection id is 9

Server version: 5.1.34-ndb-7.0.6-cluster-gpl-log MySQL Cluster Server (GPL)

Type 'help;' or 'h' for help. Type 'c' to clear the current input statement.

mysql> use test

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> select * from numbers;

+------+------+

| num1 | num2 |

+------+------+

| 1 | 10 |

| 6 | 60 |

| 3 | 30 |

| 5 | 50 |

| 2 | 20 |

| 4 | 40 |

+------+------+

6 rows in set (0.02 sec)