MySQL Cluster running on Raspberry Pi

I start a long weekend tonight, and it’s the kids’ last day of school before their holidays, so last night felt like the right time to play a bit. This week I received my Raspberry Pi. If you haven’t heard of it then take a look at the Raspberry Pi FAQ; basically, it’s a ridiculously cheap ($25, or $35 if you want the top-of-the-range model) ARM-based PC that’s the size of a credit card.

I knew I had to have one to play with, but what to do with it? Why not start by porting MySQL Cluster onto it? We always claim that Cluster runs on commodity hardware, and surely this would be the ultimate test of that claim.

I chose the customised version of Debian – you have to copy it onto the SD memory card that acts as the storage for the Pi. Once up and running on the Pi, the first step was to increase the size of the main storage partition – it starts at about 2 Gbytes – using gparted. I then had to compile MySQL Cluster – ARM isn’t a supported platform and so there are no pre-built binaries. I needed to install a couple of packages before I could get very far:

sudo apt-get update

sudo apt-get install cmake

sudo apt-get install libncurses5-dev

Compilation initially got about 80% of the way through before failing, so if you try this yourself, save some time by applying the patch from this bug report before starting. The build scripts wouldn’t work, but I was able to just run make…

make

sudo make install

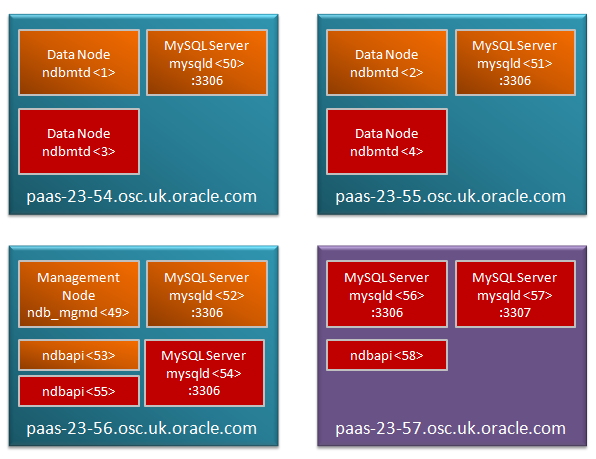

As I knew that memory was tight, I tried to come up with a config.ini file that cut down on how much memory would be needed (note that 192.168.1.122 is the Raspberry Pi while 192.168.1.118 is an 8 Gbyte Linux x86-64 PC, which doesn’t seem a very fair match!):

[ndb_mgmd]

hostname=192.168.1.122

NodeId=1

[ndbd default]

noofreplicas=2

DataMemory=2M

IndexMemory=1M

DiskPageBufferMemory=4M

StringMemory=5

MaxNoOfConcurrentOperations=1K

MaxNoOfConcurrentTransactions=500

SharedGlobalMemory=500K

LongMessageBuffer=512K

MaxParallelScansPerFragment=16

MaxNoOfAttributes=100

MaxNoOfTables=20

MaxNoOfOrderedIndexes=20

[ndbd]

hostname=192.168.1.122

datadir=/home/pi/mysql/ndb_data

NodeId=3

[ndbd]

hostname=192.168.1.118

datadir=/home/billy/my_cluster/ndbd_data

NodeId=4

[mysqld]

NodeId=50

[mysqld]

NodeId=51

[mysqld]

NodeId=52

[mysqld]

NodeId=53

[mysqld]

NodeId=54
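
To get a feel for just how small this configuration is, the explicitly sized buffers from the [ndbd default] section can be added up. This is only a rough sketch: the helper name to_bytes is made up for illustration, it assumes that the K and M suffixes are multiples of 1024, and a data node allocates more memory than these five parameters alone.

```shell
# Convert an NDB-style size such as 2M or 500K into bytes.
# Assumption: K, M and G mean 1024, 1024^2 and 1024^3.
to_bytes() {
  case "$1" in
    *K) echo $(( ${1%K} * 1024 )) ;;
    *M) echo $(( ${1%M} * 1024 * 1024 )) ;;
    *G) echo $(( ${1%G} * 1024 * 1024 * 1024 )) ;;
    *)  echo "$1" ;;
  esac
}

# DataMemory, IndexMemory, DiskPageBufferMemory,
# SharedGlobalMemory and LongMessageBuffer from the config above.
total=0
for size in 2M 1M 4M 500K 512K; do
  total=$(( total + $(to_bytes "$size") ))
done
echo "$total bytes"
```

That comes to just under 8 Mbytes for the named buffers, which is a lower bound on what the data node process will actually use rather than a prediction of it.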

Running the management node worked pretty easily but then I had problems starting the data nodes – checking how much memory I had available gave me a hint as to why!

pi@raspberrypi:~$ free -m

total used free shared buffers cached

Mem: 186 29 157 0 1 11

-/+ buffers/cache: 16 169

Swap: 0 0 0

OK, so 157 Mbytes of memory available and no swap space. Not ideal, so the next step was to use gparted again to create swap partitions on the SD card as well as a massive 1 Gbyte one on my MySQL-branded USB stick (need to persuade marketing to be a bit more generous with those). A quick edit of /etc/fstab and a restart and things were looking in better shape:

pi@raspberrypi:~$ free -m

total used free shared buffers cached

Mem: 186 29 157 0 1 11

-/+ buffers/cache: 16 169

Swap: 1981 0 1981
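
For anyone repeating this, the /etc/fstab entries and a quick check might look something like the sketch below. The device names are placeholders (check your own before copying anything), and swap_total_mb is just a helper name invented for illustration.

```shell
# Hypothetical /etc/fstab lines, one per swap partition
# (device names are assumptions, not taken from the setup above):
#   /dev/mmcblk0p3  none  swap  sw  0  0
#   /dev/sda1       none  swap  sw  0  0

# Print the total swap in Mbytes from `free -m` output read on stdin.
swap_total_mb() {
  awk '$1 == "Swap:" { print $2 }'
}
```

After a reboot, piping the real output through the helper with `free -m | swap_total_mb` should print a non-zero figure if the new partitions were picked up.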

Next, I started the management node and one data node on the Pi, as well as a second data node on the Linux server “ws2” (I want High Availability after all, although I admit that running the management node on the same host as a data node is a single point of failure)…

pi@raspberrypi:~/mysql$ ndb_mgmd -f conf/config.ini --configdir=/home/pi/mysql/conf/ --initial

pi@raspberrypi:~/mysql$ ndbd

billy@ws2:~$ ndbd -c 192.168.1.122:1186

I could then confirm that everything was up and running:

pi@raspberrypi:~$ ndb_mgm -e show

Connected to Management Server at: localhost:1186

Cluster Configuration

---------------------

[ndbd(NDB)] 2 node(s)

id=3 @192.168.1.122 (mysql-5.5.22 ndb-7.2.6, Nodegroup: 0, Master)

id=4 @192.168.1.118 (mysql-5.5.22 ndb-7.2.6, Nodegroup: 0)

[ndb_mgmd(MGM)] 1 node(s)

id=1 @192.168.1.122 (mysql-5.5.22 ndb-7.2.6)

[mysqld(API)] 5 node(s)

id=50 (not connected, accepting connect from any host)

id=51 (not connected, accepting connect from any host)

id=52 (not connected, accepting connect from any host)

id=53 (not connected, accepting connect from any host)

id=54 (not connected, accepting connect from any host)

Cool!

The next step is to run a MySQL Server so that I can actually test the Cluster. Running it on the Pi caused problems (157 Mbytes of RAM doesn’t stretch as far as it used to), so I ran it on ws2 instead:

billy@ws2:~/my_cluster$ cat conf/my.cnf

[mysqld]

ndbcluster

datadir=/home/billy/my_cluster/mysqld_data

ndb-connectstring=192.168.1.122:1186

billy@ws2:~/my_cluster$ mysqld --defaults-file=conf/my.cnf&

Check that it really has connected to the Cluster:

pi@raspberrypi:~/mysql$ ndb_mgm -e show

Connected to Management Server at: localhost:1186

Cluster Configuration

---------------------

[ndbd(NDB)] 2 node(s)

id=3 @192.168.1.122 (mysql-5.5.22 ndb-7.2.6, Nodegroup: 0, Master)

id=4 @192.168.1.118 (mysql-5.5.22 ndb-7.2.6, Nodegroup: 0)

[ndb_mgmd(MGM)] 1 node(s)

id=1 @192.168.1.122 (mysql-5.5.22 ndb-7.2.6)

[mysqld(API)] 5 node(s)

id=50 @192.168.1.118 (mysql-5.5.22 ndb-7.2.6)

id=51 (not connected, accepting connect from any host)

id=52 (not connected, accepting connect from any host)

id=53 (not connected, accepting connect from any host)

id=54 (not connected, accepting connect from any host)
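
If you wanted to script that check rather than eyeball it, something like the sketch below might do. It relies only on the output format shown above, where a connected node has an @address after its id; node_connected is a made-up helper name, not part of MySQL Cluster.

```shell
# Succeed if the given node id appears with an @address (i.e. connected)
# in `ndb_mgm -e show` output read from stdin.
node_connected() {
  grep -q "^id=$1[[:space:]]*@"
}
```

It would be used as `ndb_mgm -e show | node_connected 50 && echo "mysqld is attached"`.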

Finally, I just need to check that I can read and write data…

billy@ws2:~/my_cluster$ mysql -h 127.0.0.1 -P3306 -u root

mysql> CREATE DATABASE clusterdb;USE clusterdb;

Query OK, 1 row affected (0.24 sec)

Database changed

mysql> CREATE TABLE simples (id INT NOT NULL PRIMARY KEY) engine=ndb;

120601 13:30:20 [Note] NDB Binlog: CREATE TABLE Event: REPL$clusterdb/simples

Query OK, 0 rows affected (10.13 sec)

mysql> REPLACE INTO simples VALUES (1),(2),(3),(4);

Query OK, 4 rows affected (0.04 sec)

Records: 4 Duplicates: 0 Warnings: 0

mysql> SELECT * FROM simples;

+----+

| id |

+----+

| 1 |

| 2 |

| 4 |

| 3 |

+----+

4 rows in set (0.09 sec)

OK, so is there any real application for this? Well, probably not, other than providing a cheap development environment: imagine scaling out to 48 data nodes, which at $35 each would cost $1,680 (plus the cost of some SD cards)! A more practical use might be management nodes, as we know that they need very few resources. As a reminder, this is not a supported platform!